
Did you know that AI is actually clueless?

So how does it help us improve surgical practice?

By partnering with X.Y. Han, PhD, Assistant Professor of Operations Management at the University of Chicago Booth School of Business, the Surgical Data Science Collective (SDSC) is tackling a fundamental problem in surgical artificial intelligence (AI): getting models to understand what they’re looking at.

Most open-source machine learning (ML) models are trained on images of the outside world: streets, cars, trees, pedestrians. They have never seen a surgical field, and the visual intricacies of the human body are vastly different from a child chasing a lost football down the street, so it would be rather unreasonable to expect them to perform well. Besides, facing a raging pituitary adenoma must be quite the shock after all those hours of simply watching leaves blow in the wind and road rage unfold at the local intersection. So the question stands: how do we build models that understand endoscopy, not traffic?


Instead of starting from models trained on street scenes, we use self-supervised learning (SSL) to pre-train models on actual endoscopic video footage. SSL doesn’t need labels: it learns patterns and structure by using the data itself to generate supervisory signals. This allows SDSC to train on hundreds of hours of unlabeled surgical video and extract meaningful representations that serve as a much better foundation for downstream tasks, such as recognizing procedural phases, detecting instruments, or tracking surgeon actions.
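To make “using the data itself to generate supervisory signals” more concrete, here is a minimal sketch of the contrastive objective behind SimCLR, a widely used SSL method. It assumes an encoder (not shown) has already mapped two randomly augmented views of each video frame to embedding vectors; the function name `nt_xent_loss` and the temperature of 0.5 are illustrative choices, not SDSC’s or Dr. Han’s actual pipeline. The two views of the same frame form a positive pair, every other view in the batch serves as a negative, and the loss is low when each view is most similar to its own partner.

```python
import numpy as np


def nt_xent_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """NT-Xent (normalized temperature-scaled cross-entropy) loss, as in SimCLR.

    z1, z2: (N, D) embeddings of two augmented views of the same N frames.
    Row i of z1 and row i of z2 are a positive pair; the other 2N - 2
    embeddings in the batch act as negatives for that pair.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)              # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit length -> dot product = cosine
    sim = (z @ z.T) / temperature                     # (2N, 2N) scaled similarities
    np.fill_diagonal(sim, -np.inf)                    # exclude each view's match with itself
    # Positive partner of view i is i + N (first half) or i - N (second half).
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(n)])
    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    # Average negative log-probability assigned to the true partner.
    return float(-log_prob[np.arange(2 * n), pos].mean())


# Toy check with random vectors standing in for encoder outputs.
rng = np.random.default_rng(0)
frames = rng.normal(size=(8, 32))
matched = nt_xent_loss(frames, frames + 0.01 * rng.normal(size=frames.shape))
unrelated = nt_xent_loss(frames, rng.normal(size=frames.shape))
print(f"matched views: {matched:.3f}, unrelated views: {unrelated:.3f}")
```

Minimizing this loss pushes the encoder toward embeddings that stay stable under augmentation yet differ between frames, which is the kind of label-free representation downstream tasks can then build on.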

But there’s a catch.

Training of this kind is massively compute-intensive: SSL methods such as SimCLR require a huge amount of graphics processing unit (GPU) power and memory, which makes them impractical for many organizations, including ours. Fortunately, as a non-profit, SDSC has the flexibility to collaborate with academic partners like Dr. Han, who has access to a high-performance GPU cluster at the University of Chicago. He uses this resource during a dedicated period in which he can pursue impactful research, and we benefit from the models he helps us pre-train.
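Part of why contrastive methods like SimCLR are so demanding is that they draw their negatives from the same batch, and the original SimCLR work found that larger batches (it tested sizes up to 8192) generally yield better representations. The back-of-the-envelope sketch below, built around a hypothetical helper `simclr_step_size`, shows how the work per training step grows with the number of source frames N: 2N augmented images pass through the encoder, and the loss compares every view with every other view. It counts comparisons only and ignores the encoder’s own activation memory, which usually dominates in practice.

```python
def simclr_step_size(batch_size: int) -> dict:
    """Count the per-step work of a SimCLR-style loss for `batch_size` source frames."""
    views = 2 * batch_size  # each frame is augmented twice
    return {
        "images_encoded": views,                 # forward + backward passes through the encoder
        "negatives_per_anchor": views - 2,       # all views except itself and its positive
        "pairwise_similarities": views * views,  # full 2N x 2N similarity matrix
    }


for n in (256, 4096):
    print(n, simclr_step_size(n))
```

Going from 256 to 4,096 frames per batch multiplies the pairwise comparisons 256-fold (from about 262 thousand to about 67 million), on top of 16 times as many images pushed through the encoder each step.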


This partnership has already paid off. Pre-trained models from Dr. Han’s pipeline have given us a significant boost in projects like ETV/CPC, ALL-SAFE, and procedure breakdown for PTS. Rather than starting from generic models trained on irrelevant data, we now start from surgical-native models that dramatically improve performance across the board. In areas like phase recognition, we’ve seen immediate gains, and this is only the beginning.
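One common way to check whether pre-trained representations help a downstream task such as phase recognition is a linear probe: freeze the encoder, extract one embedding per frame, and train only a linear classifier on top. The sketch below assumes that setup; the embeddings are synthetic stand-ins rather than real encoder outputs, and `train_linear_probe` and `predict` are hypothetical helper names. It illustrates the evaluation idea only and is not a description of how SDSC measured its gains (fully fine-tuning the encoder is an equally common choice).

```python
import numpy as np


def train_linear_probe(features: np.ndarray, labels: np.ndarray, num_classes: int,
                       lr: float = 0.1, epochs: int = 300):
    """Fit a softmax (multinomial logistic regression) classifier on frozen features."""
    n, d = features.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = features @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * features.T @ grad                  # full-batch gradient descent step
        b -= lr * grad.sum(axis=0)
    return w, b


def predict(features: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Return the most likely phase index for each frame embedding."""
    return np.argmax(features @ w + b, axis=1)


# Synthetic stand-in: 4 "phases", each a cluster of 64-dimensional frame embeddings.
rng = np.random.default_rng(0)
num_phases, dim = 4, 64
centers = rng.normal(size=(num_phases, dim))
labels = rng.integers(0, num_phases, size=500)
feats = centers[labels] + 0.5 * rng.normal(size=(500, dim))

w, b = train_linear_probe(feats[:400], labels[:400], num_phases)
accuracy = (predict(feats[400:], w, b) == labels[400:]).mean()
print(f"held-out phase accuracy: {accuracy:.2f}")
```

Because only the final linear layer is trained, the probe’s accuracy mostly reflects how well the frozen features already separate the phases, which is why better pre-training tends to show up directly in results like these.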

Perhaps most importantly, this collaboration opens the door to larger, more sophisticated models, like transformers, that need far more data to be effective. Surgical AI has traditionally been held back by two things: limited data and limited labels. With SSL and access to large-scale compute, we can finally scale up. Our growing dataset and library of pre-trained models mean we can match, or even surpass, state-of-the-art efforts in other industries.

Few groups in surgical AI are able to do this. We can, because we’ve made the most of our non-profit structure, our clinical network, and our collaborators. We now have the ability to build surgical models that few others can, and we’re using that edge to improve surgical outcomes through smarter tools, better analytics, and more powerful insights.

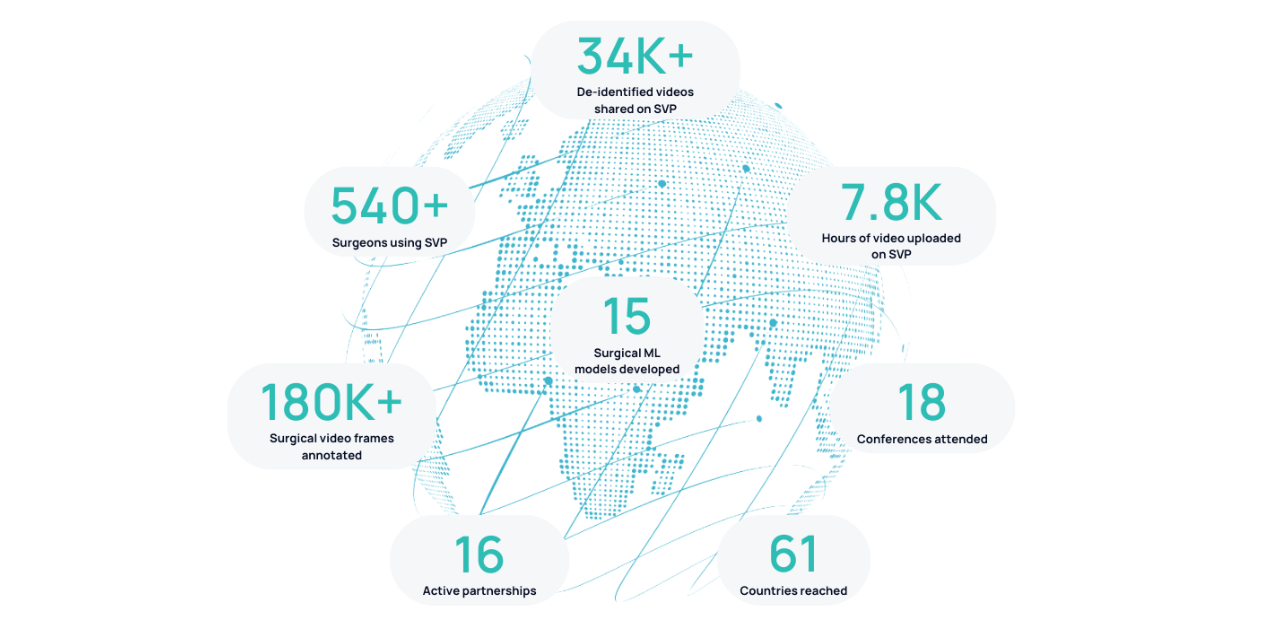

If you’re working with endoscopic video and want to build the next generation of surgical intelligence, we invite you to start with SDSC’s Surgical Video Platform (SVP): better models, trained on the right data and built for your domain.

