Over the past few years, Artificial Intelligence (AI) has made significant strides in the surgical space, offering groundbreaking advancements in surgical education and the analysis of procedures from pre-operative planning to post-operative review. Surgical media contains a wealth of educational insights that can reshape the learning curve for surgeons worldwide, especially in regions where access to educational resources is limited. However, as AI plays an increasingly central role in the global surgical community, ensuring the reliability and accuracy of AI-generated insights is critical to the future of surgical decision-making.

At the recent Congress of Neurological Surgeons (CNS) Annual Meeting, Jack Cook, a Machine Learning Engineer here at SDSC, presented our work on a real-time monitoring system designed to track the performance and data quality of AI models used by our Surgical Video Platform (SVP). His presentation highlighted the essential role this monitoring system plays in maintaining the integrity of AI analysis on the platform, ensuring that the information provided to surgeons and researchers is both reliable and actionable.

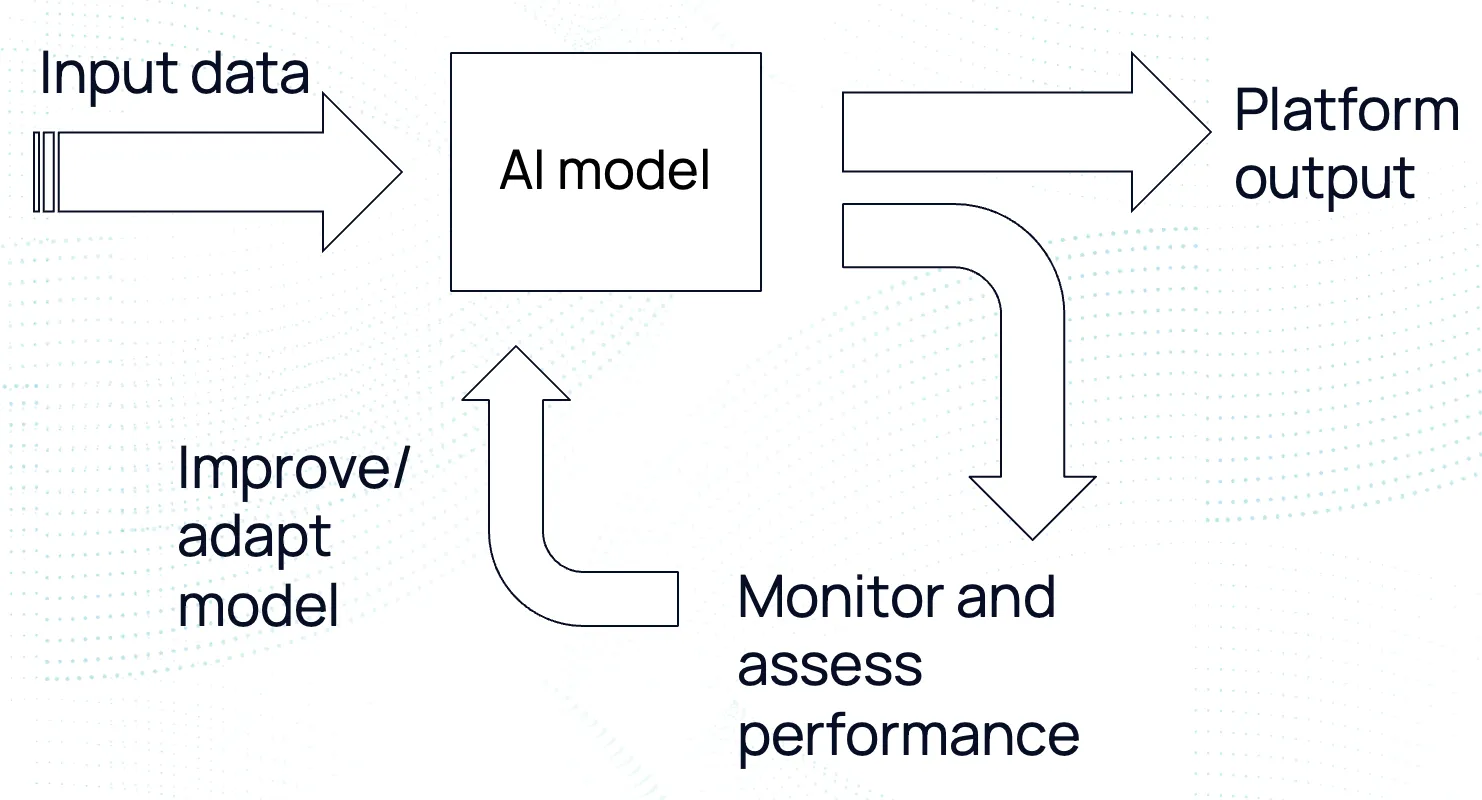

At SDSC, we developed the SVP to securely store and organize copious amounts of surgical footage, while harnessing the full potential of Machine Learning / Computer Vision (ML/CV) models to extract meaningful information. These models are capable of identifying specific tools in the footage, tracking their usage, and recognizing the different phases of a surgery. This data-driven approach provides surgeons with unparalleled insights into their workflows and techniques, enabling them to optimize their performance and enhance patient outcomes.

However, a fundamental challenge arises with these machine learning algorithms: how can we ensure that AI models are performing effectively in real-world scenarios? More importantly, how can we detect discrepancies or errors in the AI's outputs before they impact critical surgical decisions? Jack Cook’s presentation at CNS 2024 focused on how SDSC’s real-time monitoring system is designed to track the performance of SVP’s AI models, flagging any unusual or erroneous frames. This continuous feedback mechanism is essential to ensuring that the platform’s AI models are functioning as expected.

At the heart of SDSC’s proprietary monitoring system are two key mechanisms:

1. Logical Heuristics: These are rules based on fundamental surgical principles. For instance, a surgeon can only use two hands at once, so if the AI model detects three or more tools in use simultaneously, this likely indicates a frame error. Logical inconsistencies like this trigger alerts, allowing engineers and medical experts to review the flagged predictions and make improvements.

2. Surgery-Specific Rules: Each surgical procedure has its own constraints regarding the number and type of tools used during different phases. Certain tools should never appear together, and specific combinations or sequences of tools are standard for different procedures. If the AI model violates these surgery-specific rules, the system flags the frame for review. This level of scrutiny helps to catch more subtle errors that might otherwise go unnoticed.
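The two mechanisms above can be sketched as a small rule checker. This is a minimal illustration, not SDSC's implementation: the `FramePrediction` structure, the phase names, the forbidden pair, and the per-phase tool sets are all hypothetical placeholders. Only the two-tool limit comes from the "two hands" heuristic described above.

```python
from dataclasses import dataclass

# From the "two hands" heuristic: more than two tools in use is suspicious.
MAX_TOOLS_IN_USE = 2

# Hypothetical surgery-specific rules. Tool and phase names are placeholders,
# not real clinical constraints.
FORBIDDEN_PAIRS = [frozenset({"tool_a", "tool_b"})]
ALLOWED_BY_PHASE = {
    "phase_1": {"tool_a", "curette"},
    "phase_2": {"tool_b", "curette"},
}


@dataclass
class FramePrediction:
    """Hypothetical per-frame model output: detected tools and surgical phase."""
    frame_index: int
    phase: str
    tools: list[str]


def check_frame(pred: FramePrediction) -> list[str]:
    """Return the reasons a frame should be flagged (empty list if none)."""
    reasons = []
    present = set(pred.tools)

    # Logical heuristic: a surgeon has two hands.
    if len(pred.tools) > MAX_TOOLS_IN_USE:
        reasons.append("too_many_tools")

    # Surgery-specific rule: some tools should never appear together.
    for pair in FORBIDDEN_PAIRS:
        if pair <= present:
            reasons.append("forbidden_pair")

    # Surgery-specific rule: each phase has an expected set of tools.
    allowed = ALLOWED_BY_PHASE.get(pred.phase)
    if allowed is not None and not present <= allowed:
        reasons.append("tool_not_expected_in_phase")

    return reasons


ok = check_frame(FramePrediction(0, "phase_1", ["tool_a", "curette"]))
bad = check_frame(FramePrediction(1, "phase_1", ["tool_a", "tool_b", "curette"]))
```

In this sketch a frame can be flagged for several reasons at once, which would let a reviewer see which rule fired instead of receiving a bare "error" signal.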

The monitoring system goes beyond basic tool detection. A separate AI model assesses the “essence” of each detected tool, that is, how closely it resembles other detections of the same tool type in the footage. For example, if the system identifies 100 curettes but a few of them look significantly different from the rest, those outliers are flagged as unusual. Applied across different circumstances, these models let the monitoring system flag anomalies in tool detection in real time, so errors can be identified and corrected quickly.
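One way to picture this kind of outlier check is to represent each detection as a feature vector and flag the ones that sit unusually far from the rest. The sketch below is an assumption about how such a check could work, not a description of the model used in SVP: the idea of per-detection embeddings, the median-absolute-deviation score, and the `3.5` threshold are all illustrative choices.

```python
import math
from statistics import median


def flag_outliers(embeddings, threshold=3.5):
    """Return indices of vectors that are unusually far from the group centroid.

    Uses a robust z-score based on the median absolute deviation (MAD)
    of each vector's distance to the centroid.
    """
    dim = len(embeddings[0])
    centroid = [sum(v[i] for v in embeddings) / len(embeddings) for i in range(dim)]
    dists = [math.dist(v, centroid) for v in embeddings]

    med = median(dists)
    mad = median(abs(d - med) for d in dists)
    if mad == 0:
        # All distances are (nearly) identical; nothing stands out.
        return []

    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [i for i, d in enumerate(dists) if 0.6745 * (d - med) / mad > threshold]


# Toy data: ten similar "curette" vectors and one that looks very different.
curettes = [(0.1 * i, 0.0) for i in range(10)] + [(10.0, 10.0)]
unusual = flag_outliers(curettes)  # indices of detections to send for review
```

A median-based score is used here because a handful of extreme detections would otherwise inflate a mean and standard deviation and hide themselves.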

When training AI models, the data is typically labeled and classified in a controlled environment, allowing the model to “learn” from these examples. However, once the model is deployed in real-world settings, it no longer has labeled data to reference. Without a feedback loop, it can be difficult to know whether the model is still performing as expected. This is where the monitoring system becomes crucial.

The monitoring system constantly evaluates the model’s performance in different scenarios, identifying areas where the model excels and where it struggles. If a model begins to perform poorly in a certain context, engineers and medical experts can investigate the underlying cause, whether that is a difference in the data, unforeseen complexity in the surgical footage, or a limitation of the model itself.

This continuous assessment allows issues to be detected early and gives the team what it needs to keep improving the AI models after deployment. If poor detection is identified, the monitoring system sends an automatic alert, prompting the team to review the flagged frames and address any discrepancies. This feedback loop is critical for ensuring that the AI models used in SVP are reliable, adaptable, and safe.
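As a rough illustration of the alerting step, the sketch below tracks the share of flagged frames over a sliding window and signals when it exceeds a limit. The class name, window size, and threshold are hypothetical. The text does not specify how SVP decides when to alert, so this is only one plausible shape for such a check.

```python
from collections import deque


class FlagRateMonitor:
    """Signal an alert when too many recent frames have been flagged."""

    def __init__(self, window=100, max_flag_rate=0.2):
        # Keep only the most recent `window` flag results.
        self.recent = deque(maxlen=window)
        self.max_flag_rate = max_flag_rate

    def update(self, flagged: bool) -> bool:
        """Record one frame's result; return True if an alert should fire."""
        self.recent.append(flagged)
        window_full = len(self.recent) == self.recent.maxlen
        rate = sum(self.recent) / len(self.recent)
        # Wait for a full window so a single early flag does not trigger an alert.
        return window_full and rate > self.max_flag_rate


monitor = FlagRateMonitor(window=10, max_flag_rate=0.2)
quiet = [monitor.update(False) for _ in range(10)]   # ten clean frames
noisy = [monitor.update(True) for _ in range(3)]     # then three flagged frames
```

Keeping one monitor per context (for example per procedure type) would be one way to see where a model struggles, in line with the per-scenario evaluation described above.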

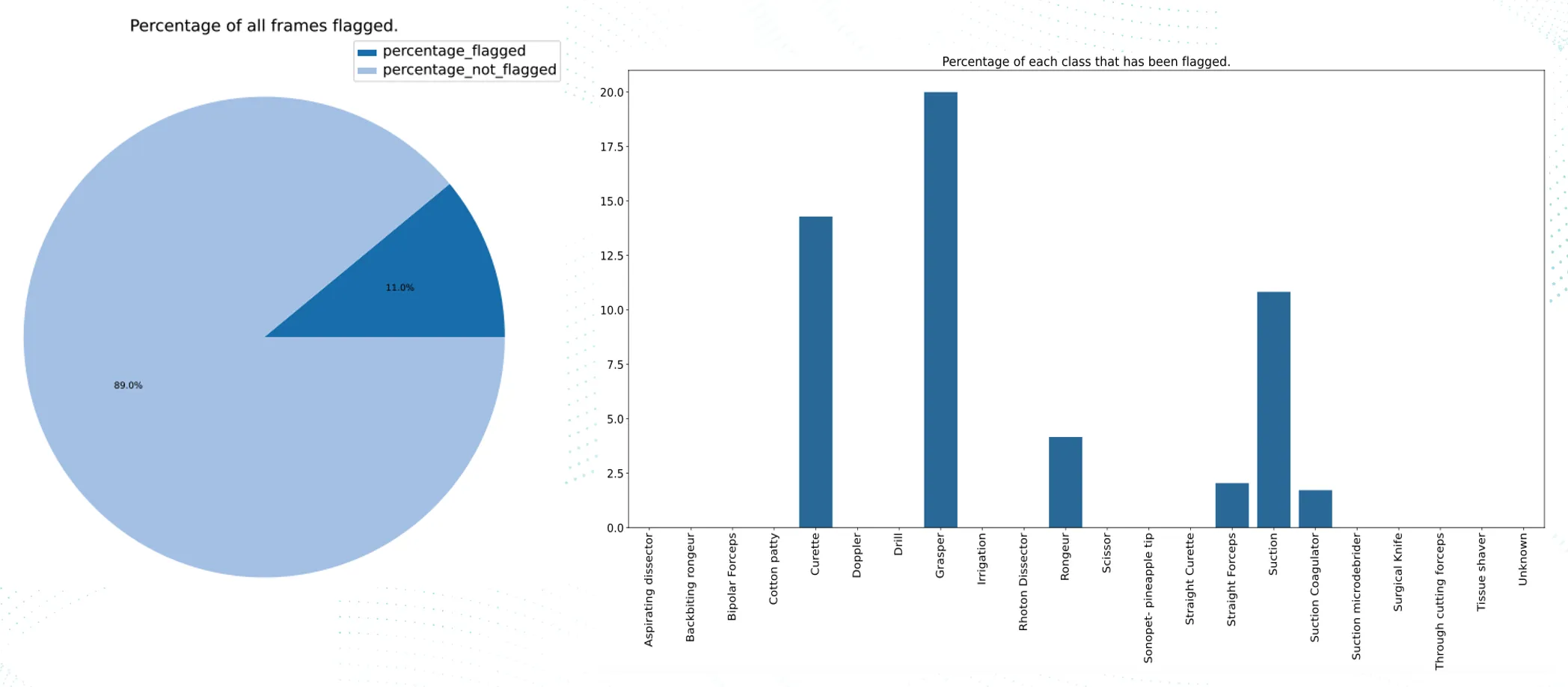

The real-time monitoring system doesn’t just flag potential issues. It also generates detailed reports that give users insight into the model’s performance. These reports are sent automatically and include graphs, tables, and screenshots of the flagged frames, allowing surgeons and engineers to see exactly where the AI model might be underperforming. This transparency enables rapid identification and correction of errors, helping to keep the data used in surgical analysis at a high quality. In one test set, for example, the system flagged 110 frames, representing 13% of the total footage. Of these flagged frames, 88% required revision, demonstrating the system’s effectiveness in catching significant errors that might otherwise have gone unnoticed.
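The test-set figures can be turned into approximate frame counts with a few lines of arithmetic. The percentages quoted above are rounded, so the results below are estimates and not reported numbers; the variable names are illustrative.

```python
flagged_frames = 110      # frames flagged by the monitoring system
flagged_share = 0.13      # flagged frames as a share of the test set
revision_share = 0.88     # share of flagged frames that required revision

# If 110 frames are about 13% of the test set, the set holds roughly 846 frames.
estimated_total = round(flagged_frames / flagged_share)

# About 97 of the 110 flagged frames needed revision.
needing_revision = round(flagged_frames * revision_share)

print(estimated_total, needing_revision)  # prints "846 97"
```

In other words, roughly 97 of about 846 frames contained an error that the system surfaced for review, while only about 13 flagged frames turned out not to need changes.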

The work being done at SDSC, as presented by Jack Cook at the CNS Annual Meeting, represents a significant step forward in the application of AI to surgical video analysis. Through the development of a real-time monitoring system for tool detection models, we’re providing surgeons with the confidence they need to rely on AI for critical insights into their procedures. As AI continues to shape the future of surgery, this kind of continuous feedback and improvement will be essential to ensuring that AI-driven tools are both safe and effective. If you’re a surgeon or medical professional interested in harnessing the power of AI to improve surgical outcomes, we invite you to explore the possibilities of our Surgical Video Platform and join us in pushing the boundaries of what’s possible with AI in the operating room.
