
For many surgeons, the transition from residency to independent practice can feel like stepping into uncharted territory. In surgical training, feedback often comes only when something goes noticeably wrong, leaving little room for quantifying the small, steady improvements that define surgical proficiency.

Dr. Jacob Young is a neurosurgical attending who completed his training in 2024 at the University of California, San Francisco (UCSF) Department of Neurological Surgery. He sees enormous potential in a structured video-based platform where surgeons can upload operative videos and receive objective performance metrics – akin to Dr. Pamela Peters’ Strava “Athlete Intelligence” analogy.

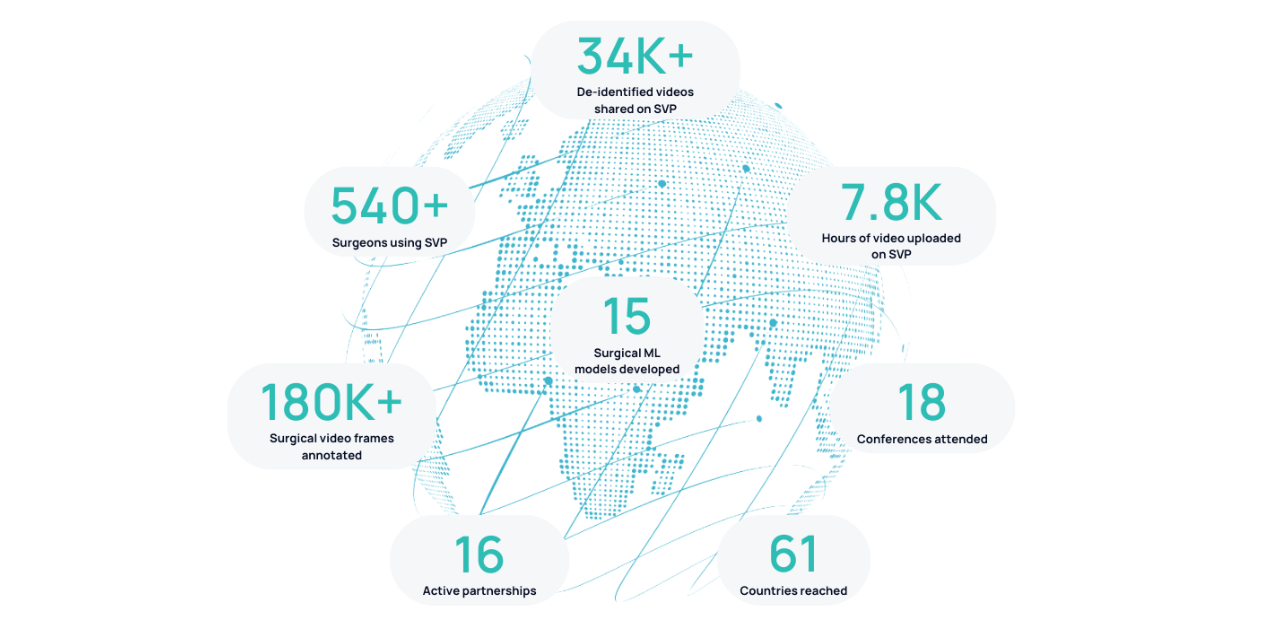

At the 2025 American Association of Neurological Surgeons (AANS) Annual Meeting in Boston, Dr. Young presented two proof-of-concept studies conducted using Surgical Data Science Collective’s (SDSC) Surgical Video Platform (SVP), both of which explored how AI-driven analytics can bridge critical training gaps in neurosurgery.

Field of Focus During Posterior Fossa Craniotomies

Jacob S. Young¹, Margaux Masson-Forsythe², Daniel Donoho², Kaan Duman², Philip V. Theodosopoulos¹

¹Department of Neurological Surgery, University of California, San Francisco

²Surgical Data Science Collective

Microsurgery demands exceptional precision, particularly in neurosurgical procedures like posterior fossa craniotomies where the margin for error is razor-thin. This operation entails opening the back of the skull to remove brain tumors from the posterior fossa – the space containing the cerebellum and brainstem (critical for movement, balance, and other vital functions). Optimal visualization and a stable field of focus are key to a successful operation, but both are typically assessed subjectively and are difficult to measure.

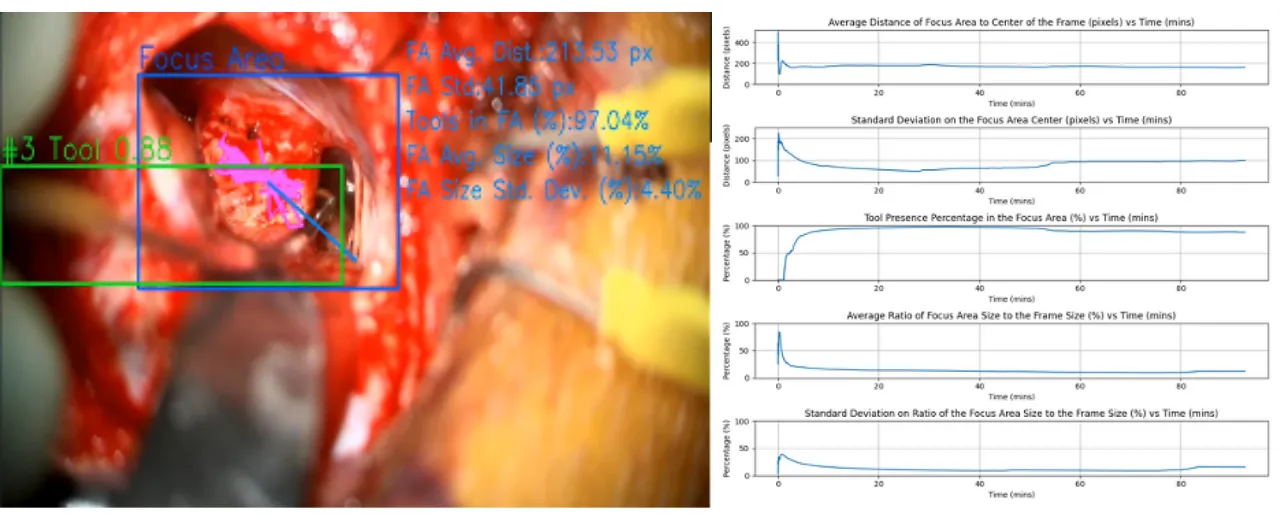

Dr. Young’s first study applied AI to microscopic video footage from retrosigmoid craniotomies performed by a senior surgeon at UCSF. Using SVP, the team ran a spatially varying blur detector to extract the “focus region” of each frame, then analyzed how that region changed over the course of the procedure.

Key findings:

- The average distance between the focus area center and frame center started at ~200 pixels and narrowed as the operation progressed – suggesting increased stability and precision over time.

- The focus area size, while small relative to the total frame, remained consistent, even during complex phases of surgery.

- Surgical tools remained within the focus zone for more than 85% of the procedure – indicating high alignment between surgeon intent and technical execution.
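The three measurements above can be illustrated with a minimal sketch. It assumes the focus region and each detected tool have already been reduced to axis-aligned boxes in pixel coordinates; the `Box` class, the function names, and the example numbers are hypothetical and are not taken from SVP or the study.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Box:
    """Axis-aligned box in pixel coordinates (hypothetical representation)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center(self):
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)

    @property
    def area(self):
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)

    def contains(self, point):
        x, y = point
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def focus_offset(focus, frame_w, frame_h):
    """Distance in pixels from the focus-region center to the frame center."""
    fx, fy = focus.center
    return hypot(fx - frame_w / 2, fy - frame_h / 2)


def focus_area_fraction(focus, frame_w, frame_h):
    """Share of the frame covered by the focus region (0 to 1)."""
    return focus.area / (frame_w * frame_h)


def tool_in_focus_rate(frames):
    """Fraction of tool-containing frames whose tool center lies in focus.

    frames: list of (focus_box, tool_box) pairs; tool_box is None when
    no tool was detected in that frame.
    """
    with_tool = [(f, t) for f, t in frames if t is not None]
    if not with_tool:
        return 0.0
    hits = sum(1 for f, t in with_tool if f.contains(t.center))
    return hits / len(with_tool)


# Made-up example frames for a 1920x1080 video.
example_frames = [
    (Box(980, 600, 1180, 800), Box(1000, 650, 1100, 750)),    # tool in focus
    (Box(900, 480, 1100, 680), Box(1300, 900, 1400, 1000)),   # tool out of focus
    (Box(900, 480, 1100, 680), None),                         # no tool detected
]
print(focus_offset(example_frames[0][0], 1920, 1080))
print(tool_in_focus_rate(example_frames))
```

Averaging `focus_offset` over a sliding window of frames would give a trend line of the kind described in the first finding.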

Why it matters:

By quantifying tool alignment and the stability and consistency of focus, this AI-driven approach offers a new way to evaluate microsurgical skill. In the future, these metrics could help residents track their progress from month to month, highlighting both strengths and areas for improvement that might otherwise go unnoticed.

SVP to Quantify Surgical Performance Metrics

Jacob S. Young¹, Margaux Masson-Forsythe², Daniel Donoho², Kaan Duman², Philip V. Theodosopoulos¹

¹Department of Neurological Surgery, University of California, San Francisco

²Surgical Data Science Collective

In his second presentation, Dr. Young tackled a broader challenge: how to objectively measure surgical efficiency. With open surgical cases declining and resident autonomy shrinking, many neurosurgeons pursue additional fellowship training to feel confident in their skills. Dr. Young believes AI could help close this training gap:

“Anything that can optimize the amount of educational value from each case in residency has the potential to get trainees to a point where they’re ready to practice independently faster.”

Using SVP, his team analyzed microscopic video from six posterior fossa tumor resections, generating thousands of labeled frames to train and test the system. SDSC’s machine learning models tracked tool use, movement patterns, and even moments when instruments crossed paths – all distilled into an overall “efficiency score” for each surgery.
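The source does not describe how instrument crossings were detected. As one hedged illustration, the sketch below assumes each instrument is tracked as a per-frame bounding box and treats a "crossing" as a run of consecutive frames in which two boxes overlap. The function names and this definition are assumptions, not SDSC's method.

```python
def boxes_overlap(a, b):
    """Return True if two (x_min, y_min, x_max, y_max) boxes intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def count_crossing_events(track_a, track_b):
    """Count runs of consecutive frames in which the two boxes overlap.

    track_a, track_b: per-frame boxes for two instruments, with None
    for frames where the instrument was not detected.
    """
    events = 0
    overlapping = False
    for a, b in zip(track_a, track_b):
        now = a is not None and b is not None and boxes_overlap(a, b)
        if now and not overlapping:
            events += 1  # a new overlap run starts on this frame
        overlapping = now
    return events


# Made-up tracks: the boxes overlap in frames 1-2 and again in frame 4.
left_tool = [(0, 0, 10, 10), (5, 5, 15, 15), (5, 5, 15, 15), (0, 0, 10, 10), (8, 8, 20, 20)]
right_tool = [(20, 20, 30, 30), (10, 10, 20, 20), (12, 12, 22, 22), (20, 20, 30, 30), (15, 15, 25, 25)]
print(count_crossing_events(left_tool, right_tool))
```

Counting runs rather than individual frames keeps one prolonged overlap from being reported as many separate events.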

Key findings:

- The model achieved near-perfect performance in identifying surgical instruments (mean average precision = 0.988 for tools, 0.994 for retractors).

- Efficiency scores ranged from 0.6 to 1, with higher scores reflecting smoother, more coordinated tool usage.

- Precision-recall and confidence curves were similar for tools and retractors – indicating that the model reliably distinguished between predicted tools and retractors used at different stages of the operation.
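For readers unfamiliar with mean average precision, the sketch below shows one common, non-interpolated way to compute it from ranked detections. It assumes each detection has already been marked as a true or false positive by matching it to a ground-truth box. The matching rule and overlap threshold used in the study are not stated in the source, and the numbers here are made up.

```python
def average_precision(detections, num_ground_truth):
    """Non-interpolated average precision for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_ground_truth: number of labeled objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    precision_sum = 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
            precision_sum += true_positives / rank  # precision at this rank
    return precision_sum / num_ground_truth


def mean_average_precision(per_class):
    """per_class: dict of class name -> (detections, num_ground_truth)."""
    if not per_class:
        return 0.0
    scores = [average_precision(d, n) for d, n in per_class.values()]
    return sum(scores) / len(scores)


# Made-up detections for two instrument classes.
example = {
    "tool": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "retractor": ([(0.95, True), (0.6, True)], 2),
}
print(mean_average_precision(example))
```

A score near 1 means that almost every labeled instrument was found and that correct detections were ranked above incorrect ones.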

Why it matters:

This framework transforms subjective judgements into measurable data. Quantifying metrics like tool efficiency, time spent outside the optimal focus field, and unnecessary instrument changes gives residents tailored, actionable feedback. Over time, tracking these metrics could reduce the reliance on post-residency fellowships by strengthening skill development earlier in training.

A New Direction for Surgical Training

Both studies highlighted how SDSC’s SVP could reshape neurosurgical education.

Imagine the impact if residents received an automatic monthly report showing where they excelled, where they struggled, and how their efficiency compared to last month’s performance.

Even positive reinforcement – rare in the culture of “no feedback is good feedback” – becomes a valuable motivator.

Dr. Young envisions a future where objective, data-driven insights supplement mentorship, making it easier to continue as a learner long after formal training ends. “Having a tool to simply provide things to focus on would be really helpful,” he notes.

By harnessing AI to analyze surgical video, SDSC and its collaborators are pursuing a new generation of smarter, more personalized surgical training. We invite surgeons and surgical educators to apply SVP to their own research projects. Whether you’re exploring efficiency, visualization, or entirely new questions, SVP provides an AI-powered foundation to transform surgical video into meaningful insights.

Click here to submit your research idea and get started with SVP.
